State Of The Nation On The AI Regulatory Landscape

Daniel Garcia

When it comes to emerging technologies, regulators often take a reactive approach – however, this is not necessarily the case for AI.

Across the world, countries and businesses are starting not only to implement controls around AI and its use, but also to assess its potential risks in the short and medium term.

The EU is the first jurisdiction to define rules to govern AI. The AI Act was approved by the European Parliament on 13 March 2024 and is expected to be ratified by all 27 member states before summer 2024.

The EU AI Act is centred on the level of risk or hazard that AI poses to the public. The aim is for organizations promoting this technology, whether private or public, to be more transparent about how they use it, to define mechanisms for how it will be governed, and to manage potential misuse or bias.

However, a risk-based approach is not the only basis for regulatory action. On a global scale, different jurisdictions are approaching the governance of AI from different angles:

- Focus on ethical use. These guidelines often centre on principles such as transparency, accountability, fairness and inclusivity.

- Protecting data and data privacy. Because AI relies on collecting vast amounts of data, different regions have expressed concerns about the collection, processing and storage of personal data.

- Corporate responsibilities. There is ongoing debate about who is accountable when decisions based on biased models cause harm.

- AI governance. As with any other model they employ, firms will be required to establish comprehensive frameworks to govern this technology. Transparency, accountability and auditability are key talking points.

- Industry-specific regulations. Given the overall gap in national regulation, some industries, such as healthcare and financial services, are beginning to implement their own guidelines.

- Global vs national legislation. Given the technology's cross-border implications, there are some initiatives to coordinate legislation across countries and regions; however, this international cooperation is still at an early stage.

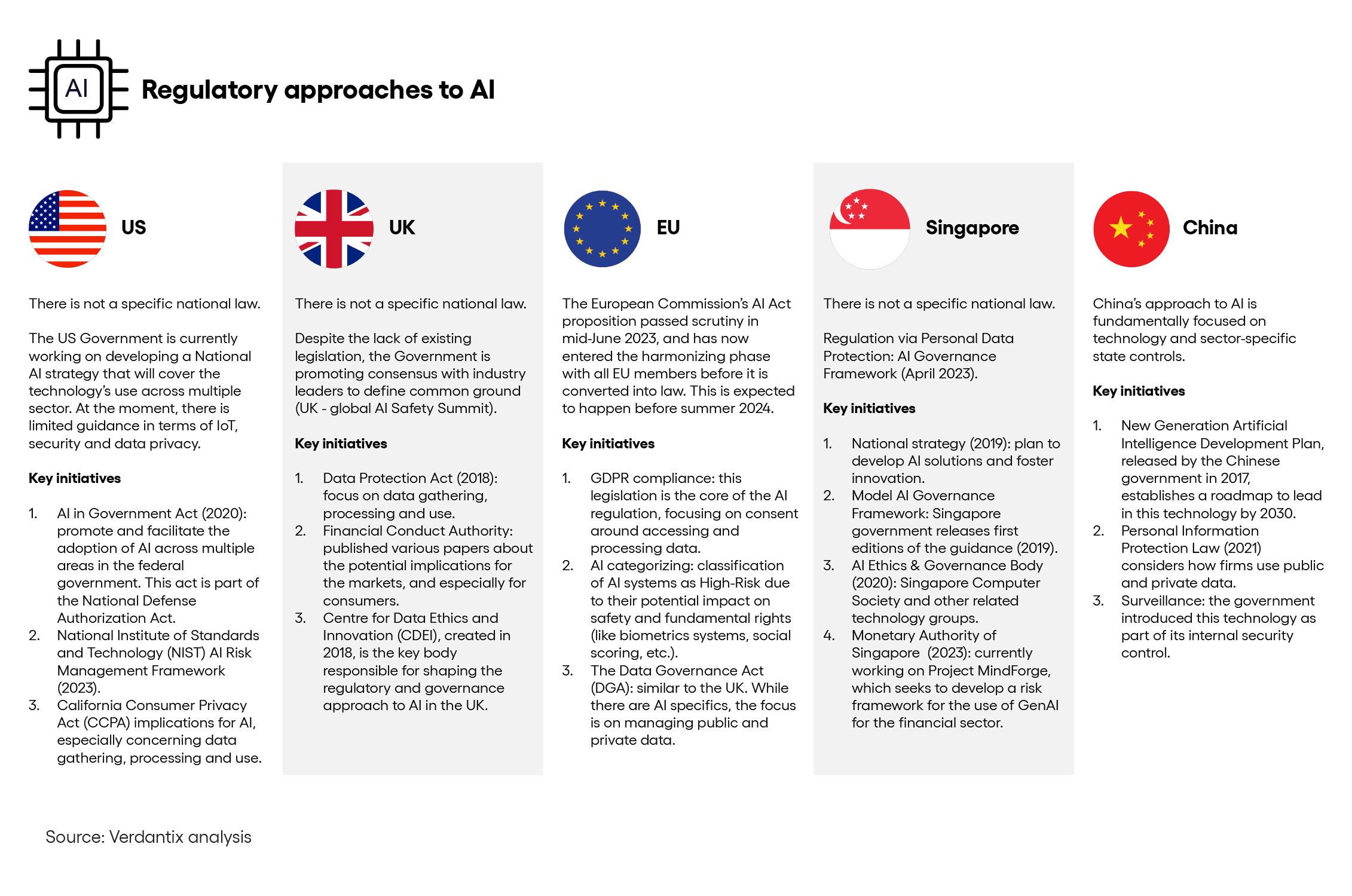

Global AI regulations

Different countries are taking different approaches to AI governance. Given the complexity of this topic, some regions are beginning to consider the implications of AI for their data protection legislation or similar frameworks, in order to protect end users.

Looking at global initiatives, there are five regions or countries that are actively developing comprehensive frameworks:

We expect that other countries and regions will follow the EU’s lead in defining specific guidelines for the governance of artificial intelligence. However, these kinds of frameworks will still take some time to be fully drafted and implemented.

Regardless of the pace at which global standards are moving, firms should get ahead of regulation and take the initiative to evaluate the potential implications of AI, following industry best practices on data management, consumer protection, ethics and other areas.

About The Author

Daniel Garcia

Senior Manager