Inside OpenAI’s $1 Trillion Compute Bet: How The AMD Deal Is Redefining Data Centre Infrastructure

When OpenAI announced plans to deploy six gigawatts of AMD chips, the market focused on semiconductors. In truth, this is an energy and infrastructure story. The power required for this rollout places artificial intelligence on the same scale as national utilities, revealing the physical realities of building intelligence at global scale.

What is the scale and timeline of OpenAI’s AMD deal?

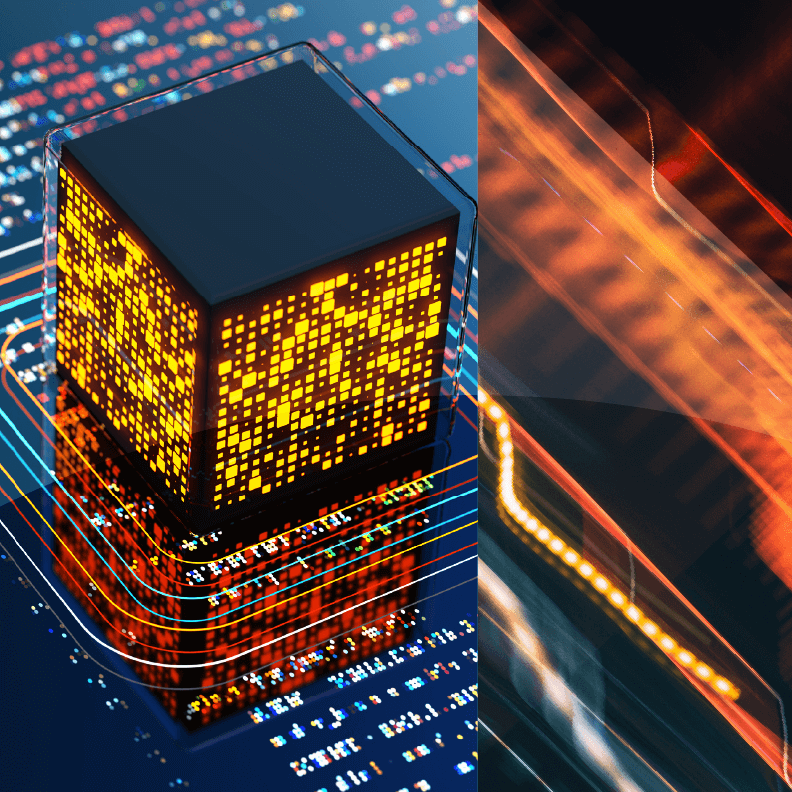

Announced in October 2025, the agreement commits OpenAI to deploying up to six gigawatts of AMD’s MI450 GPUs between 2026 and 2030. The first phase begins in late 2026 with one gigawatt of computing power, expanding gradually as new chip generations are released. Each gigawatt is roughly the output of a large power plant. When complete, the total capacity will equal around three times Kenya’s national peak electricity demand, showing just how energy-intensive AI infrastructure has become.
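To put those gigawatts in energy terms, here is a rough sketch of the arithmetic. It assumes, purely for illustration, that the capacity runs continuously at full load. Real facilities draw less than their nameplate capacity, and the function name and `utilisation` parameter are hypothetical, not figures from the announcement.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_energy_twh(capacity_gw: float, utilisation: float = 1.0) -> float:
    """Return the energy drawn over one year, in terawatt-hours.

    `utilisation` is an assumed average load factor between 0 and 1.
    """
    if not 0.0 <= utilisation <= 1.0:
        raise ValueError("utilisation must be between 0 and 1")
    gwh = capacity_gw * utilisation * HOURS_PER_YEAR
    return gwh / 1000.0  # 1 TWh = 1,000 GWh


if __name__ == "__main__":
    print(f"First phase (1 GW): {annual_energy_twh(1):.2f} TWh per year")
    print(f"Full build-out (6 GW): {annual_energy_twh(6):.2f} TWh per year")
```

At full load, one gigawatt works out to 8.76 TWh a year and six gigawatts to about 52.6 TWh. Lowering `utilisation` scales both figures down proportionally.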

AMD estimates that each gigawatt of compute capacity costs tens of billions of dollars to develop, bringing the project’s total value to well above $100 billion. OpenAI will also receive warrants for up to 160 million AMD shares, around 10% of the company, tied to performance milestones. The announcement has already reshaped AMD’s market position, with its share price climbing over 30% as investors recognize the scale of the partnership.

How does this fit into OpenAI’s wider infrastructure strategy?

The AMD agreement builds on OpenAI’s existing $100 billion deal with NVIDIA for an additional ten gigawatts of compute power, confirming the firm’s strategy to diversify chip supply while expanding capacity at record pace. Together, these projects form one of the most ambitious private infrastructure programmes ever attempted.

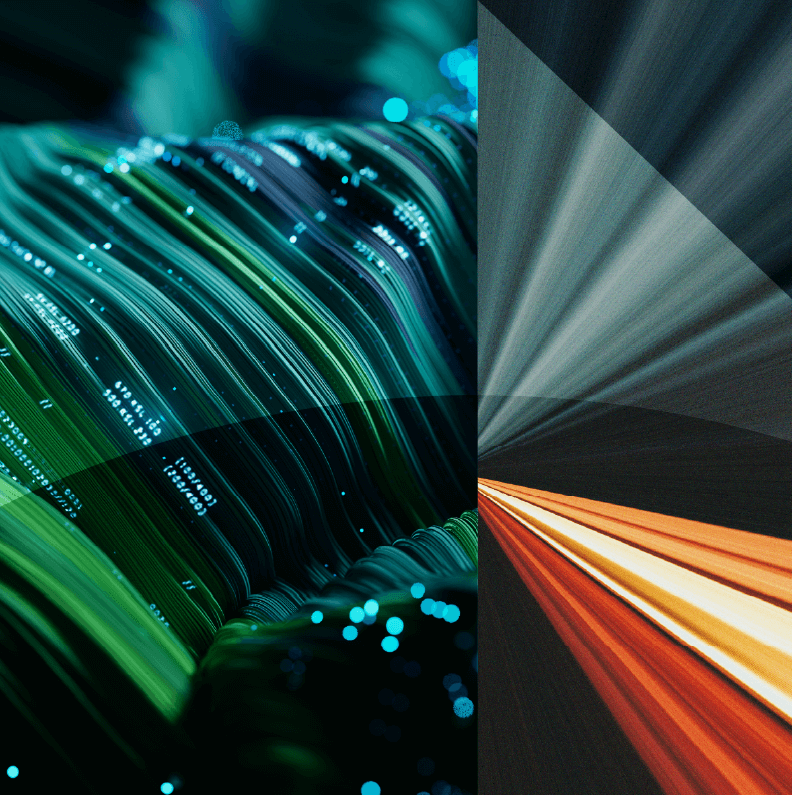

Over the past decade, data centres have evolved from background IT utilities into strategic infrastructure. Cloud computing laid the foundation, but artificial intelligence has redefined the economics. Each new generation of models demands greater power density, advanced liquid-cooling systems and high-voltage substations to maintain reliable supply. What was once about server management has become a large-scale engineering challenge linking technology, energy and sustainability.

What role will management software play in sustaining AI growth?

Managing facilities that consume gigawatts of electricity requires more than hardware expansion: it demands orchestration at every layer. Integrated IoT platforms and energy management systems now coordinate thousands of assets in real time, consolidating sensor data on temperature, power flow and cooling efficiency to give operators a continuous view of performance across the entire site. The infrastructure software products formerly grouped under data centre infrastructure management (DCIM) are evolving into more sophisticated, unified data centre management platforms that bring together power, cooling and workload optimization under a single intelligent layer.

To maintain this delicate balance, intelligent automation is stepping in. Embedded AI control systems analyse operational information as it streams in, fine-tuning workloads and cooling output to sustain optimal conditions. Digital twins recreate entire facilities in virtual form, enabling engineers to simulate design changes and anticipate stress points before implementation. Predictive analytics detect emerging risks early, while optimization engines distribute energy dynamically based on demand and grid conditions.

Why does this matter for the future of AI?

Every gain in efficiency now carries outsized value. A single percentage point improvement in cooling performance can save millions of dollars annually and, cumulatively, prevent thousands of tonnes of emissions by 2050. Software, sensor and simulation technologies are becoming as vital to AI progress as the chips themselves.
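A back-of-envelope sketch shows how a small cooling gain can reach that scale. Every input below is a hypothetical assumption chosen for illustration, not a figure from OpenAI, AMD or any operator: a 1 GW IT load, cooling that draws 30% of IT power, electricity at $60 per MWh and a grid intensity of 0.4 tonnes of CO2 per MWh. The improvement is read here as a 1% cut in cooling energy.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def cooling_savings(
    it_load_mw: float,
    cooling_overhead: float,
    improvement: float,
    price_usd_per_mwh: float,
    grid_tco2_per_mwh: float,
) -> tuple[float, float]:
    """Return (annual USD saved, annual tonnes of CO2 avoided).

    cooling_overhead: cooling power as a fraction of IT load (assumed).
    improvement: fractional cut in cooling energy, e.g. 0.01 for 1%.
    """
    cooling_mw = it_load_mw * cooling_overhead
    saved_mwh = cooling_mw * improvement * HOURS_PER_YEAR
    return saved_mwh * price_usd_per_mwh, saved_mwh * grid_tco2_per_mwh


if __name__ == "__main__":
    # All inputs are illustrative assumptions, not measured values.
    usd, tonnes = cooling_savings(
        it_load_mw=1000.0,
        cooling_overhead=0.30,
        improvement=0.01,
        price_usd_per_mwh=60.0,
        grid_tco2_per_mwh=0.4,
    )
    print(f"Annual saving: ${usd:,.0f}")
    print(f"Annual emissions avoided: {tonnes:,.0f} tonnes CO2")
```

Under these assumptions, a single gigawatt site saves roughly $1.6 million and avoids about 10,500 tonnes of CO2 a year. The result scales linearly with each input, so a multi-gigawatt fleet or a higher electricity price raises both figures in proportion.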

As artificial intelligence continues its rapid expansion, the operators that manage their infrastructure most intelligently will set the pace for the entire industry. The next leap forward won’t come solely from faster processors, but from smarter management of the systems that power and cool them.

To learn more about advanced software and control technologies transforming data centre management, read Market Insight: 8 Ways DCIM Is Evolving To Meet Tomorrow’s Data Centre Demands.

About The Author

Henry Yared

Analyst